
This article was originally posted on HackerNoon.

ChatGPT, OpenAI's impressive chatbot, has fueled a leap in the global understanding of the potential of artificial intelligence (AI). AI and machine learning (ML) have shaped our daily lives for some time, but ChatGPT is a visceral demonstration of what AI can do, so it's no wonder it has garnered so much attention.

And before you ask, no, this article wasn't written using ChatGPT.

ChatGPT and Software Development

The software development community has paid particular attention to ChatGPT's ability to produce professional-level programming examples from even the most idiosyncratic requests. This has also fueled speculation about how AI may change the software industry and affect software engineering jobs.

Will everyday citizen developers be able to make deeper inroads into software development as no-code/low-code tools and AI keep improving? And will AI produce better, more secure code than developers write today?

Let's get down to the basics and demystify AI and ML.

Demystifying AI and ML

At a high level, AI is the process of taking an algorithm and feeding it training data that enables it to recognize patterns and then apply those patterns in novel ways. For example, the grammar tools in Microsoft Word, Google Docs, and Grammarly are given examples of proper grammar and of common mistakes to look for when analyzing your writing; they then give you feedback based on the patterns they see (or don't see).


The most critical factor in producing good AI is good training data. AI development is almost as old as software engineering itself; the giant leap we've seen recently can be attributed to how easy it has become to acquire massive computing power and to consume the gigantic amounts of training data freely available on the internet.

ChatGPT is trained on vast amounts of text data from a variety of sources, such as books, websites, and social media. This enables the model to generate human-like responses to a wide range of questions and prompts, but it also means that its responses can be influenced by the biases and inaccuracies present in the training data.
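To make the point about training data concrete, here is a toy sketch in Python. It is a bigram model, a vastly simpler technique than the neural network behind ChatGPT, and the three-sentence corpus is invented for illustration. Still, it shows the same basic dependency: the model can only echo patterns found in its training text, including skewed or wrong ones.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, max_words: int = 6) -> str:
    """Greedily extend `start` with the most frequent next word seen in training."""
    words = [start]
    while len(words) < max_words and follows.get(words[-1]):
        # most_common(1) returns [(word, count)]; ties go to the word seen first
        words.append(follows[words[-1]].most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    # Hypothetical, deliberately biased training data: two of three sentences
    # claim user input is safe, so the model learns to repeat that claim.
    corpus = [
        "user input is safe",
        "user input is safe to trust",
        "user input is dangerous",
    ]
    model = train_bigrams(corpus)
    print(generate(model, "user"))
```

Because "safe" follows "is" more often than "dangerous" does, the model confidently completes "user input is safe to trust". Nothing in the algorithm checks whether that is true; it simply reflects the data it was fed.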

The Dangers of Relying on AI for Secure Coding

ChatGPT sometimes gives the wrong answer, and it does so confidently. Unless you can vet its responses, using ChatGPT may pose a risk to your organization.

GitHub Copilot is an AI-based tool that suggests code while developers are actively writing it. It was trained on the massive collection of code stored in GitHub's repositories. Like ChatGPT, it can produce impressive results.

But not always.

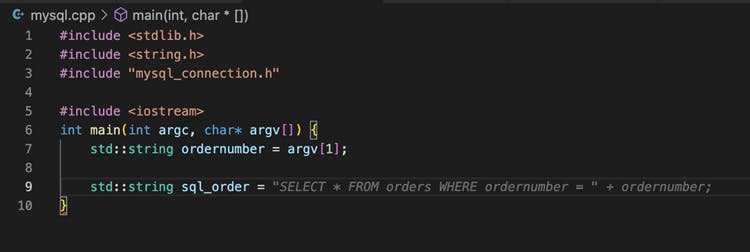

My day job requires me to produce simple code examples that can be used to train software developers on secure coding practices. While I was creating one of these examples, GitHub Copilot suggested the following code (the suggested code is in gray text):[1]

Producing correct, secure example code requires a lot of reasoning on the AI's part: it would have to understand the library used to communicate with the database, and it would have to understand the intent of the code. Both are heavy lifts for AI, so I'm not surprised it didn't make a better suggestion.

That's why it's paramount to have someone vet these suggestions and reject any that introduce insecure code.
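Copilot's exact suggestion isn't reproduced in the text, so the following is a hypothetical illustration rather than the code it produced. Assuming the flaw is the common one of building SQL from user input, this minimal Python sketch uses the standard-library `sqlite3` module and an invented `users` table to contrast the pattern a reviewer should reject with the parameterized query they should ask for.

```python
import sqlite3


def find_user_insecure(conn: sqlite3.Connection, username: str) -> list[tuple]:
    # INSECURE: the username is pasted into the SQL text, so input such as
    # "x' OR '1'='1" changes the query's logic (SQL injection).
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_secure(conn: sqlite3.Connection, username: str) -> list[tuple]:
    # SECURE: the "?" placeholder makes the driver pass username as data,
    # never as part of the SQL statement itself.
    query = "SELECT id, name FROM users WHERE name = ?"
    return conn.execute(query, (username,)).fetchall()


if __name__ == "__main__":
    # Hypothetical in-memory database for the demonstration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

    payload = "x' OR '1'='1"
    print(find_user_insecure(conn, payload))  # every row leaks
    print(find_user_secure(conn, payload))    # no rows match
```

Both functions look plausible at a glance, which is exactly why an AI suggestion like the first one can slip through unless a trained reviewer is watching for it.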

Properly trained developers are still the best line of defense for ensuring that code is secure and architectures are hardened against attacks. Tools like GitHub Copilot and ChatGPT show much promise for making developers more efficient and may eventually reduce the amount of insecure code. However, as with self-driving cars, that reality is probably still decades away.

A multi-tiered approach to protecting your organization from security attacks is still the best practice today. Minimizing your exposure to attacks means combining:

- A well-educated staff

- A culture that values security

- The use of automated tools (static and dynamic analysis tools, for example)

- A security-first software development lifecycle

One confession: paragraph six was written by ChatGPT.

[1] Since I often write insecure code on purpose for bad-coding examples, it's quite possible that I've trained GitHub Copilot on this bad habit. Still, I believe the point stands.